
Linux: Monitoring resources – CPU

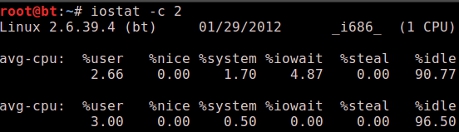

In this post in the Linux resources series we are going to talk about monitoring CPU usage. When everything slows down, the CPU is a good first suspect. You can use the iostat command with the -c option to see the current CPU load; a number after the options sets the interval in seconds between reports (for example, iostat -c 2 prints a new report every 2 seconds). If you don't have iostat, install the sysstat package.
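On Linux, CPU percentages like the ones iostat reports are derived from counters the kernel exposes in /proc/stat. As a rough sketch of that idea (not how iostat itself is implemented), the hypothetical helper cpu_busy_pct below compares two samples of the aggregate cpu line and prints the percentage of time that was neither idle nor waiting on I/O. It assumes the Linux field order user, nice, system, idle, iowait, irq, softirq, steal.

```shell
# cpu_busy_pct PREV CURR  (hypothetical helper, for illustration)
# PREV and CURR are two samples of the "cpu" line from /proc/stat:
#   cpu user nice system idle iowait irq softirq steal [guest guest_nice]
# Prints the share of time (in %) that was neither idle nor iowait between them.
cpu_busy_pct() {
  awk -v prev="$1" -v curr="$2" 'BEGIN {
    np = split(prev, p, " ")
    split(curr, c, " ")
    # Sum user..steal only (fields 2-9); guest time is already counted in user.
    total = 0
    for (i = 2; i <= 9 && i <= np; i++) total += c[i] - p[i]
    idle = (c[5] + c[6]) - (p[5] + p[6])   # idle + iowait
    if (total <= 0) { print "0.0"; exit }
    printf "%.1f\n", 100 * (total - idle) / total
  }'
}

# Example with two made-up samples: 1000 ticks elapsed, 850 of them idle.
cpu_busy_pct "cpu 100 0 50 850 0 0 0 0" "cpu 200 0 100 1700 0 0 0 0"

# Live usage on Linux, sampling one second apart:
#   prev=$(grep '^cpu ' /proc/stat); sleep 1; curr=$(grep '^cpu ' /proc/stat)
#   cpu_busy_pct "$prev" "$curr"
```

The example call prints 15.0, because 150 of the 1000 elapsed ticks were spent doing work.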

This shows a breakdown of the current CPU activity; for a description of what each value means, check the iostat man page.

Using top

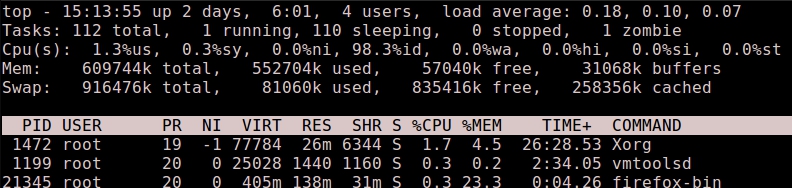

If we want to know which processes are using most of the CPU, we can use the top command, which shows what's going on in the system: running processes, CPU and memory usage, uptime, and more.

It refreshes automatically (every 3 seconds by default in many versions) and sorts by the %CPU column by default. To sort by a different column, press F (capital f) and then the key for the column you want to sort by; the sort-field screen looks slightly different between versions of top. You can also kill a process directly from top by pressing k and then entering the PID of the process.
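top is interactive, which makes it awkward in scripts. As an alternative sketch (a swapped-in technique, not part of top), the hypothetical helper top_cpu sorts ps-style rows by their %CPU column. The ps options shown in the comment (-eo, --no-headers) assume the procps version of ps found on most Linux distributions. Note that procps ps reports %CPU averaged over each process's lifetime, so its numbers will not match top's current values exactly.

```shell
# top_cpu N  (hypothetical helper, for illustration)
# Reads rows of "%CPU PID COMMAND" on stdin (no header line) and prints
# the N rows with the highest %CPU, highest first.
top_cpu() {
  # -k1,1 compares only the first column; n = numeric, r = descending
  sort -k1,1nr | head -n "$1"
}

# Live usage with procps ps (%CPU first, because command names may contain spaces):
#   ps -eo %cpu,pid,comm --no-headers | top_cpu 5
# top itself also has a batch mode for scripts (one iteration):
#   top -b -n 1 | head -n 15
```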

One detail relevant to CPU usage, shown both by top and by the uptime command, is the load average (sometimes shortened to loadavg), which gives us an idea of how busy the CPU has been over time. There are three load average numbers: the averages over the last 1, 5, and 15 minutes. So how does the load average work? It represents the number of processes that are running or ready to run but waiting in the queue because the CPU is busy with other work (on Linux it also counts processes in uninterruptible sleep, usually waiting for disk I/O). Each CPU core can handle 1 unit of work, so on a single-core CPU a load average that stays under 1.0 is fine, while one that stays above 1.0 means the CPU has more work than it can handle. On a machine with N cores the same threshold is N. For a more detailed explanation of this subject, head over here.
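The per-core rule of thumb above can be sketched as a small check. load_status is a hypothetical helper name, and the 1.0-per-core threshold is the rule of thumb from the text, not a hard limit.

```shell
# load_status LOAD CORES  (hypothetical helper, for illustration)
# Divides a load average by the number of cores and reports whether the
# CPUs have more work than they can handle (more than 1.0 per core).
load_status() {
  awk -v load="$1" -v cores="$2" 'BEGIN {
    per_core = load / cores
    printf "%.2f %s\n", per_core, (per_core > 1.0 ? "overloaded" : "ok")
  }'
}

load_status 1.5 1   # single core, load 1.5
load_status 3.0 4   # four cores, load 3.0

# Live usage on Linux: /proc/loadavg starts with the 1, 5 and 15 minute averages.
#   read one five fifteen rest < /proc/loadavg
#   load_status "$fifteen" "$(nproc)"
```

The two example calls print "1.50 overloaded" and "0.75 ok": the same load of 3.0 that would swamp a single core leaves spare capacity on four.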

Linux: Monitoring resources – Disk Space

Continuing with our series on Linux resources, we are now going to check disk space. To list mounted partitions with their size and used space, use the df -h command (-h means human-readable sizes, in MB/GB). We can also filter out the virtual filesystems with grep.
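The exact grep from the original screenshot isn't shown, so this is only a sketch of one way to do it: a filter (hide_virtual_fs, a hypothetical name) that drops common virtual filesystems from df output. The list of names (tmpfs, devtmpfs, udev, none) is an assumption; adjust it for your system.

```shell
# hide_virtual_fs  (hypothetical helper, for illustration)
# Reads df output on stdin and drops rows whose filesystem column starts
# with one of the listed virtual filesystem names.
hide_virtual_fs() {
  grep -v -E '^(tmpfs|devtmpfs|udev|none)[[:space:]]'
}

# Live usage:
#   df -h | hide_virtual_fs
# GNU df can also skip filesystem types by itself:
#   df -h -x tmpfs -x devtmpfs
```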

Ok, so we may be running out of disk space. How can we find the biggest culprits?

Using the du command

You can use du with the -s option for a summary of each argument (otherwise you would get the size of every file inside it). If you have no idea where to start looking, start from the root directory; it may take some time, so it is better to do it during off hours. If you have network-mounted devices or other directories you want to ignore, use the --exclude option.
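A minimal sketch of the "biggest culprits" search, wrapped in a hypothetical helper named biggest_dirs: du -s prints one total per entry under a directory, and sort -nr puts the largest first. It assumes GNU du, whose default unit is 1K blocks; the glob skips hidden entries.

```shell
# biggest_dirs DIR [N]  (hypothetical helper, for illustration)
# Lists the N largest entries directly under DIR (default 10), sizes in 1K blocks.
biggest_dirs() {
  local dir=$1 n=${2:-10}
  # -s: one summary line per argument instead of one line per file;
  # errors (e.g. permission denied) are discarded to keep the listing clean
  du -s "$dir"/* 2>/dev/null | sort -nr | head -n "$n"
}

# Example: the five biggest entries under /var
#   biggest_dirs /var 5
```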

If you want du's human-readable output sorted by size, sort can do this with its -h option since coreutils 7.5.
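The reason -h matters shows up with a few made-up lines in du -h's "SIZE<TAB>NAME" format: a plain numeric sort only looks at the leading number, while sort -h also understands the K, M and G suffixes. The sample values and the sample_sizes name are illustrative only.

```shell
# sample_sizes: made-up lines in du -h's "SIZE<TAB>NAME" format
sample_sizes() {
  printf '1.5G\tlogs\n200K\tnotes\n12M\tcache\n'
}

echo "sort -n (compares only the leading number):"
sample_sizes | sort -n    # 1.5G, 12M, 200K: wrong order
echo "sort -h (understands K, M, G suffixes):"
sample_sizes | sort -h    # 200K, 12M, 1.5G

# Real usage, largest last:
#   du -sh /var/* 2>/dev/null | sort -h
```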

Other Linux disk space tools

There is another tool called ncdu, which does basically the same as du but with an ncurses-based interface that lets you navigate through the results and sort them. On Ubuntu-based distros it's just an apt-get away.

If you prefer a GUI application, you can try Baobab, which is GNOME-based, or Filelight for the KDE crowd.

Linux: Monitoring resources – Memory

I'm starting this series of posts about Linux monitoring, where you will learn how to check your system resources (RAM, CPU, disk). This first post covers memory.

You can check memory information with the free command; with the -m option you get the output in MB. Let's look at the output from my BackTrack VM and discuss what it means.

The first line shows our total memory (minus a little that is reserved by the kernel), used memory and free memory. Don't panic when you see how low the free value is; that's completely normal. To understand why, let's move on to the second line: -/+ buffers/cache.

The second line recalculates the first one without the memory the kernel uses for buffers and cache. Its 'used' column is the memory actually used by running programs, and its 'free' column adds buffers and cache back to the free memory. The cache is mainly filled with data read from disk; keeping recently used data in RAM speeds up access to it, because it doesn't have to be loaded from disk again. The trick is that the kernel releases cached data to make room whenever programs need more memory, so we can treat most of the cached memory as available memory (the free column on the second line). In fact, the GNOME System Monitor application uses these values to show used and available memory.
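The free column on the -/+ buffers/cache line is simply free memory plus buffers plus cache. The sketch below (mem_available_mb, a hypothetical helper) rebuilds that estimate from /proc/meminfo-style input. Newer versions of free drop that line in favour of an "available" column based on the kernel's MemAvailable field (Linux 3.14 and later), which is a more careful estimate than this simple sum.

```shell
# mem_available_mb  (hypothetical helper, for illustration)
# Reads /proc/meminfo-format lines on stdin (values in kB) and prints
# MemFree + Buffers + Cached in MB, like the old "-/+ buffers/cache" free column.
mem_available_mb() {
  # ^Cached: is anchored so that SwapCached: is not counted
  awk '/^MemFree:/ { f = $2 } /^Buffers:/ { b = $2 } /^Cached:/ { c = $2 }
       END { printf "%d\n", (f + b + c) / 1024 }'
}

# Live usage on Linux:
#   mem_available_mb < /proc/meminfo
```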

Now it's time for a little experiment to see cached memory in action. We will use the time command to measure how long a command takes to complete; in this case I chose ls with the recursive (-R) option. What we are going to see is the difference in speed between an uncached and a cached run.

root@bt:~# time ls -R /opt > /dev/null

real 0m14.516s
user 0m0.320s
sys 0m0.436s

root@bt:~# time ls -R /opt > /dev/null

real 0m0.506s
user 0m0.344s
sys 0m0.080s

As you can see the results are pretty dramatic.

Finally, the last line shows our swap usage. Swap is the dedicated partition we create at install time to help out when RAM is getting low. When the system needs more RAM (for example, to launch a new application), data from applications that haven't been used in a while is moved to disk; this is called swapping out, and it temporarily frees physical RAM for the applications you are actually using. When that data is needed again, it is brought back into RAM (swapped in).
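The swap numbers come from the same kernel source as the rest of free's output. As a small sketch (swap_used_mb is a hypothetical helper), swap in use is SwapTotal minus SwapFree in /proc/meminfo; swapon -s lists each swap area separately.

```shell
# swap_used_mb  (hypothetical helper, for illustration)
# Reads /proc/meminfo-format lines on stdin (values in kB) and prints
# the amount of swap in use, in MB.
swap_used_mb() {
  awk '/^SwapTotal:/ { t = $2 } /^SwapFree:/ { f = $2 }
       END { printf "%d\n", (t - f) / 1024 }'
}

# Live usage on Linux:
#   swap_used_mb < /proc/meminfo
#   swapon -s          # one line per swap partition or file
```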

Hope you enjoyed this! Even if you didn't, I would like to get some feedback so I know what can be improved. Thanks for reading!

Update: Check out this page for more disk cache examples => http://www.linuxatemyram.com/play.html

The tree command

With the tree command in Linux you can get a tree representation of a directory structure. Without any arguments it starts from the current directory and recursively goes into each subdirectory to show the complete hierarchy.

tree

.
├── 1
│   ├── 44
│   ├── aa
│   ├── bb
│   └── ff
└── 2
    ├── cc
    └── dd

3 directories, 5 files

These are just some directories and files I made for testing; if you run this on a real directory you will get a lot of output. To avoid that, use the -L option to limit the depth.

tree -L 1

.
├── 1
└── 2

Well, that's a bit better. You can also get other useful information, like permissions, with the -p option:

# tree -p

.
├── [drw-r-----] 1
│   ├── [drwxr-xr-x] 44
│   ├── [-rw-r--r--] aa
│   ├── [-rw-r--r--] bb
│   └── [-rw-r--r--] ff
└── [drwxr-xr-x] 2
    ├── [-rw-r--r--] cc
    └── [-rw-r--r--] dd

Another useful one is -u to show the owners of the files:

# tree -u

.
├── [root ] 1
│   ├── [root ] 44
│   ├── [matu ] aa
│   ├── [matu ] bb
│   └── [matu ] ff
└── [root ] 2
    ├── [root ] cc
    └── [root ] dd

Other options that can come in handy are -d to show only directories and -s to show the size of files, but I will leave these for you to try on your own.

Intro to Awk

Awk is the ideal tool for most of your output processing and formatting needs. We can use it to select and reformat data fields from stdin or a file, and even to print a value only when it meets a condition. In fact, awk is a programming language in itself, but don't worry too much about that.

We are going to see an example of how to print two fields from a comma-separated list. First we need to tell awk how to split the fields, in this case on commas. The option for that is -F "field_separator", so here it would be -F ,

I'm going to use an output file from a Metasploit module as an example.

awk -F , '{ print $4 "\t" $10 }' .msf4/logs/scripts/winenum/WINXP-95C9409AB_20110828.5732/wmic_useraccount_list.csv

Disabled Name
FALSE Administrator
TRUE Guest
TRUE HelpAssistant
TRUE
FALSE test
FALSE winxpPro

We use the print statement, which runs once for every line of input. Notice that it goes inside curly braces and that the whole program goes inside single quotes; otherwise it won't work, because the shell would try to interpret $4 and the other special characters itself. Then we specify the field number with a dollar sign, so for field 4 the field variable is $4. Finally, any text of our own, even a tab (\t) or a newline (\n), must be enclosed in double quotes.
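The same -F / print / $N pattern can be tried without the Metasploit file. This sketch wraps it in a hypothetical helper, print_fields, and feeds it made-up CSV data; it also shows that the field number can come from an awk variable ($a is the field whose number is stored in a).

```shell
# print_fields SEP A B  (hypothetical helper, for illustration)
# Prints fields A and B of every input line, separated by a tab.
print_fields() {
  awk -F "$1" -v a="$2" -v b="$3" '{ print $a "\t" $b }'
}

# Made-up CSV data: name,uid,shell
printf 'alice,1001,/bin/bash\nbob,1002,/bin/zsh\n' | print_fields , 1 3
```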

Using conditions

As a second example, let's split /etc/passwd on colons and print the whole line for users with a UID greater than 1000. For this we will use an if statement.

awk -F : '{ if ($3 > 1000) print $0 }' /etc/passwd

nobody:x:65534:65534:nobody:/nonexistent:/bin/sh
matu:x:1001:1001::/home/matu:/bin/bash

In this example we print the whole line using $0, only for the lines where the third column (the user id) is greater than 1000.
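Awk also has a shorter form for the same filter: a condition written outside the braces with no action prints the whole line ($0) by default. The sketch below (users_above, a hypothetical helper) uses that form and passes the threshold in with -v; adding + 0 to both sides forces a numeric comparison.

```shell
# users_above MIN  (hypothetical helper, for illustration)
# Prints passwd-format lines from stdin whose UID (field 3) is greater than MIN.
users_above() {
  awk -F : -v min="$1" '($3 + 0) > (min + 0)'
}

# Live usage:
#   users_above 1000 < /etc/passwd
```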

More awk examples

Print three aligned columns: uid, user name, shell.

awk -F":" '{ printf "%-6d \t %-22s \t %s\n", $3, $1, $7 }' /etc/passwd | sort -n
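The alignment comes from printf's width specifiers: %-6d prints an integer left-aligned in a 6-character column, %-22s does the same for a string in 22 characters, and %s prints the string as-is. To make the widths visible, this sketch (columns, a hypothetical helper) uses the same format with "|" in place of the tabs.

```shell
# columns  (hypothetical helper, for illustration)
# Same format as the one-liner above, with "|" instead of tabs so the
# padded column widths are easy to see.
columns() {
  awk -F: '{ printf "%-6d|%-22s|%s\n", $3, $1, $7 }'
}

printf 'root:x:0:0:root:/root:/bin/bash\nmatu:x:1001:1001::/home/matu:/bin/bash\n' | columns
```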

Add line numbers.

awk '{ print NR "\t" $0 } END { print "\n Number of lines: " NR }' /etc/passwd

Find and print the biggest UID.

awk -F: '{ if($3 > max) max = $3; } END { print max }' /etc/passwd
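The one-liner above relies on max starting out unset, which awk compares as 0. That works for UIDs, but it would give the wrong answer if every value were negative. A slightly more general sketch (max_field, a hypothetical helper) initializes max from the first line and only prints in the END block if there was any input.

```shell
# max_field SEP N  (hypothetical helper, for illustration)
# Prints the largest numeric value in field N of stdin.
max_field() {
  # NR == 1 seeds max from the first line instead of relying on an unset variable
  awk -F "$1" -v n="$2" 'NR == 1 || $n + 0 > max { max = $n + 0 }
                         END { if (NR) print max }'
}

# Live usage: the biggest UID on the system
#   max_field : 3 < /etc/passwd
```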

There is a lot more you can do with awk; one good place to look is over here.